Reality

Buyers Aren’t Predictable

Different shoppers respond to the same change in very different ways.

Cause

Context & Intent

Traffic source, mindset, and motivation shape test outcomes.

Insight

Segment-Aware Testing Wins

Understanding behavior differences leads to stronger conclusions.

One of the most confusing parts of A/B testing is seeing opposite reactions to the same change.

This isn’t a flaw in testing — it’s a reflection of how diverse shopper behavior really is.

🤔 Why the Same Test Gets Different Reactions

Not all visitors arrive with the same mindset.

Some are browsing casually, others are comparison shopping, and some are ready to buy immediately.

When these different mindsets collide inside one A/B test, results can look inconsistent, even though each group is responding logically to its own needs and intent.

🧠 The 5 Factors That Change Shopper Behavior in Tests

1. Traffic Source

Paid traffic, organic search, and returning visitors all have different intent levels.

2. Price Sensitivity

Some shoppers optimize for deals; others prioritize speed, trust, or quality.

3. Decision Stage

Early-stage browsers react differently than shoppers ready to check out.

4. Device Type

Mobile users tend to prefer simplicity; desktop users tolerate more detail.

5. Trust & Familiarity

First-time visitors need reassurance; repeat buyers need efficiency.

🧩 How to Design Better A/B Tests Around Behavior

- Start with one clear hypothesis.

- Focus on high-intent pages first.

- Measure profit and conversion — not clicks.

- Analyze results by segment when possible.

- Let tests run long enough to reach a planned sample size and cover at least one full week of traffic, so day-to-day noise evens out.

Good A/B testing doesn’t eliminate behavioral differences — it accounts for them.
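The advice to let tests run long enough can be made concrete with a standard sample-size estimate for comparing two conversion rates. The sketch below is a minimal Python example. It assumes a two-sided two-proportion z-test, a 5% significance level, and 80% power. The function name `sample_size_per_variant` and the 3% baseline conversion rate are hypothetical placeholders, not figures from any real store.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect a relative lift
    in conversion rate with a two-sided two-proportion z-test (assumed method)."""
    p1 = baseline_rate                          # control conversion rate
    p2 = baseline_rate * (1 + relative_lift)    # conversion rate we hope to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)           # value needed for the chosen power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)


# Hypothetical inputs: 3% baseline conversion, aiming to detect a 14% relative lift.
print(sample_size_per_variant(0.03, 0.14))
```

With these assumed inputs the estimate comes out at roughly 27,600 visitors per variant. Smaller lifts or lower baseline rates push the number up quickly, and the requirement applies to each segment you plan to judge on its own, which is why segment-level conclusions need more traffic than a single blended result.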

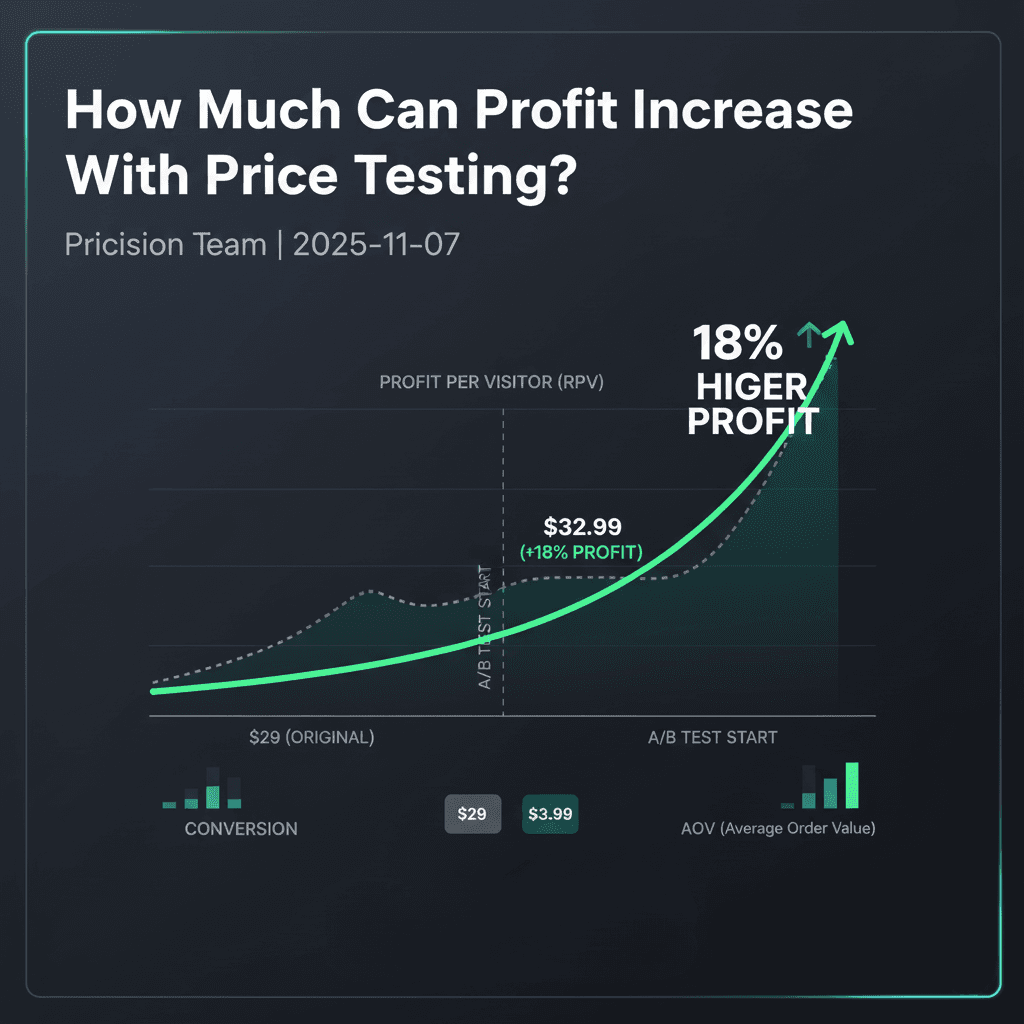

📈 Example: Same Test, Two Behaviors

A Shopify store tested simplified pricing language.

- Returning customers converted at a 14% higher rate

- Cold paid traffic showed no change

Overall results were neutral — but segment-level insights revealed a clear win.
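A segment-level breakdown like this can be sketched in a few lines of Python. The example below is a hypothetical illustration, not the store's actual data: the visitor and order counts are invented so that returning customers show a 14% relative lift while cold paid traffic shows none. It computes each segment's relative lift and a two-sided p-value from a two-proportion z-test (an assumed choice of test), then repeats the calculation on the pooled totals.

```python
from math import sqrt
from statistics import NormalDist

# Hypothetical counts: (visitors, orders) per segment and variant.
results = {
    "returning": {"control": (10000, 500), "variant": (10000, 570)},
    "cold_paid": {"control": (30000, 600), "variant": (30000, 600)},
}


def two_proportion_p_value(n_a, x_a, n_b, x_b):
    """Two-sided p-value for a difference in conversion rates (pooled z-test)."""
    p_a, p_b = x_a / n_a, x_b / n_b
    pooled = (x_a + x_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def summarize(control, variant):
    """Return (relative lift of variant over control, p-value)."""
    (n_c, x_c), (n_v, x_v) = control, variant
    rate_c, rate_v = x_c / n_c, x_v / n_v
    lift = (rate_v - rate_c) / rate_c
    return lift, two_proportion_p_value(n_c, x_c, n_v, x_v)


# Per-segment view
for name, arms in results.items():
    lift, p = summarize(arms["control"], arms["variant"])
    print(f"{name:>10}: lift {lift:+.1%}, p = {p:.3f}")

# Pooled view: sum visitors and orders across all segments
pooled_control = tuple(sum(seg["control"][i] for seg in results.values()) for i in range(2))
pooled_variant = tuple(sum(seg["variant"][i] for seg in results.values()) for i in range(2))
lift, p = summarize(pooled_control, pooled_variant)
print(f"{'overall':>10}: lift {lift:+.1%}, p = {p:.3f}")
```

With these made-up counts, the returning segment's +14% lift clears p < 0.05, cold paid traffic shows exactly no change, and the pooled result shows a diluted lift of roughly +6% with p ≈ 0.14, which on its own would read as "no winner." Checking many segments after the fact raises the chance of a false positive, so it helps to choose segments before the test starts and treat unexpected segment wins as hypotheses for a follow-up test.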

⚠️ Common Mistakes When Interpreting A/B Tests

- ❌ Assuming all users behave the same

- ❌ Ending tests too early

- ❌ Ignoring segment-level performance

- ❌ Treating “no winner” as failure

When shoppers behave differently, your A/B tests aren’t broken — they’re teaching you. Learn from the variation, and your experiments will get smarter every time.
